Gzip, Bzip2 and XZ are all popular compression tools used in UNIX-based operating systems, but which should you use? Here we are going to benchmark and compare them against each other to get an idea of the trade-off between the level of compression and the time taken to achieve it.

For further information on how to use gzip, bzip2 or xz see our guides below:

The Test Server

The test server was running CentOS 7.1.1503 with kernel 3.10.0-229.11.1, with all updates applied to date. The server had 4 CPU cores and 16GB of available memory. During the tests only one CPU core was used, as all of these tools run single-threaded by default, and that core was fully utilized while testing. With xz it is possible to specify the number of threads to use, which can greatly increase performance; for further information see example 9 here.

All tests were performed on linux-3.18.19.tar, a copy of the Linux kernel from kernel.org. This file was 580,761,600 bytes in size prior to compression.

The Benchmarking Process

The linux-3.18.19.tar file was compressed and decompressed 9 times by each of gzip, bzip2 and xz, once at each available compression level from 1 to 9. A compression level of 1 gives the fastest compression, but the compression will not be as strong, so the file will be larger. Compression level 9, on the other hand, gives the best possible compression, but it takes the longest to complete.

The compression level controls an important trade-off between CPU processing time and how well the file compresses. To get better compression and save more disk space, more CPU processing time is required. To reduce CPU processing time, a lower compression level can be used, which results in less compression and therefore more disk space used.

Each time the compression or decompression command was run, the ‘time’ command was placed in front so that we could accurately measure how long the command took to execute.

Below are the commands that were run for compression level 1:

time bzip2 -1v linux-3.18.19.tar

time gzip -1v linux-3.18.19.tar

time xz -1v linux-3.18.19.tar

Each command was run under time, with verbose output (-v) and a compression level of -1, which was then stepped up incrementally to -9. To decompress, the same command was used with the -d flag.
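The procedure above can be scripted. The following is a minimal POSIX shell sketch, not the script used to produce these results: the function names `bench` and `ext_for`, the CSV output format, and the use of GNU `date +%s.%N` for wall-clock timing are all assumptions. It relies on the fact that compressing replaces FILE with FILE.gz, FILE.bz2 or FILE.xz and that `-d` restores the original, so the same file can be reused at every level. The `-v` flag is dropped so the output stays easy to parse.

```shell
#!/bin/sh
# Hypothetical benchmark helper (not the original test script).
# Usage: bench FILE [TOOL...]        default tools: gzip bzip2 xz
# Output: one CSV line per level: tool,level,compressed_bytes,compress_s,decompress_s
# Assumes GNU date (for %N) and that each tool is on PATH.

# Extension each tool appends when compressing.
ext_for() {
  case "$1" in
    gzip)  echo gz ;;
    bzip2) echo bz2 ;;
    xz)    echo xz ;;
    *)     echo "unsupported tool: $1" >&2; return 1 ;;
  esac
}

# Wall-clock time in seconds with sub-second precision.
now() { date +%s.%N; }

bench() {
  file=$1
  shift
  if [ $# -eq 0 ]; then
    set -- gzip bzip2 xz
  fi
  for tool in "$@"; do
    ext=$(ext_for "$tool") || return 1
    for level in 1 2 3 4 5 6 7 8 9; do
      t0=$(now)
      "$tool" "-$level" "$file" || return 1    # FILE is replaced by FILE.$ext
      t1=$(now)
      size=$(wc -c < "$file.$ext")
      "$tool" -d "$file.$ext" || return 1      # restores the original FILE
      t2=$(now)
      # s + 0 strips any padding wc adds; b - a and c - b are elapsed seconds.
      awk -v t="$tool" -v l="$level" -v s="$size" -v a="$t0" -v b="$t1" -v c="$t2" \
        'BEGIN { printf "%s,%s,%d,%.3f,%.3f\n", t, l, s + 0, b - a, c - b }'
    done
  done
}
```

For example, `bench linux-3.18.19.tar > results.csv` would run all 27 compress/decompress pairs; timings from `date` include process start-up, so they will not match `time` output exactly.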

The versions of these tools were gzip 1.5, bzip2 1.0.6, and xz (XZ Utils) 5.1.2alpha.

Results

The raw data used to create the graphs below is provided in the tables below and can also be accessed in this spreadsheet.

Compressed Size

The table below shows the size in bytes of the linux-3.18.19.tar file after compression; the first column (1 to 9) is the compression level passed to the compression tool.

| Level | gzip (bytes) | bzip2 (bytes) | xz (bytes) |
|---|---|---|---|
| 1 | 153617925 | 115280806 | 105008672 |
| 2 | 146373307 | 107406491 | 100003484 |
| 3 | 141282888 | 103787547 | 97535320 |
| 4 | 130951761 | 101483135 | 92377556 |
| 5 | 125581626 | 100026953 | 85332024 |
| 6 | 123434238 | 98815384 | 83592736 |
| 7 | 122808861 | 97966560 | 82445064 |
| 8 | 122412099 | 97146072 | 81462692 |
| 9 | 122349984 | 96552670 | 80708748 |

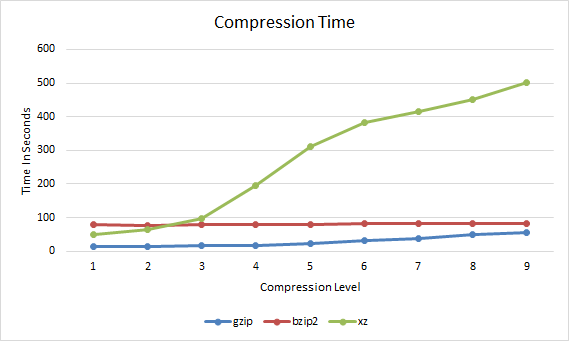

Compression Time

First we’ll start with the compression time. The table and graph show how long compression took, in seconds, at each compression level from 1 through to 9.

| Level | gzip (s) | bzip2 (s) | xz (s) |
|---|---|---|---|
| 1 | 13.213 | 78.831 | 48.473 |
| 2 | 14.003 | 77.557 | 65.203 |
| 3 | 16.341 | 78.279 | 97.223 |
| 4 | 17.801 | 79.202 | 196.146 |
| 5 | 22.722 | 80.394 | 310.761 |
| 6 | 30.884 | 81.516 | 383.128 |
| 7 | 37.549 | 82.199 | 416.965 |
| 8 | 48.584 | 81.576 | 451.527 |
| 9 | 54.307 | 82.812 | 500.859 |

So far we can see that gzip takes progressively longer as the compression level increases (from about 13 to 54 seconds), bzip2 barely changes (roughly 78 to 83 seconds), while xz increases significantly after level 3, reaching about 501 seconds at level 9.

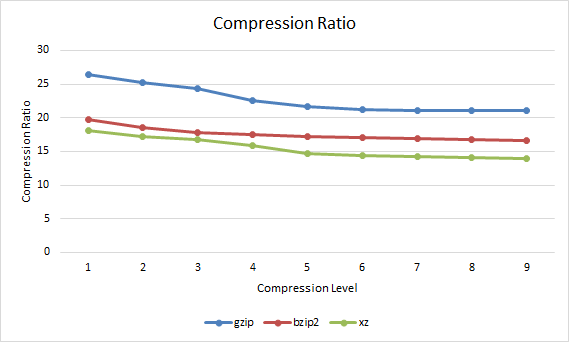

Compression Ratio

Now that we have an idea of how long the compression took, we can compare this with how well the file compressed. The compression ratio here represents the percentage of the original size that the file has been reduced to. For example, if a 100MB file is compressed with a compression ratio of 25%, the compressed version of the file is 25MB.
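The ratio used in this article can be reproduced from the compressed-size table. As a sketch (the helper names `ratio` and `savings` are illustrative and not part of any tool), the following computes the compressed size as a percentage of the original, plus the more conventional "space savings" figure, which is simply 100% minus that percentage. Values are rounded to two decimals, so the last digit may occasionally differ from the tables.

```shell
#!/bin/sh
# Hypothetical helpers; arguments are the original and compressed sizes
# in bytes (or any consistent unit).

# Compressed size as a percentage of the original size (this article's "ratio").
ratio() {
  awk -v o="$1" -v c="$2" 'BEGIN { printf "%.2f\n", c / o * 100 }'
}

# Percentage of space saved, i.e. 100 minus the ratio above.
savings() {
  awk -v o="$1" -v c="$2" 'BEGIN { printf "%.2f\n", (1 - c / o) * 100 }'
}

ratio 100 25                   # the 100MB -> 25MB example: prints 25.00
ratio 580761600 153617925      # gzip -1 on linux-3.18.19.tar: prints 26.45
savings 580761600 153617925    # same file as space saved: prints 73.55
```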

| Level | gzip (%) | bzip2 (%) | xz (%) |
|---|---|---|---|
| 1 | 26.45 | 19.8 | 18.08 |
| 2 | 25.2 | 18.49 | 17.21 |
| 3 | 24.32 | 17.87 | 16.79 |
| 4 | 22.54 | 17.47 | 15.9 |
| 5 | 21.62 | 17.22 | 14.69 |
| 6 | 21.25 | 17.01 | 14.39 |
| 7 | 21.14 | 16.87 | 14.19 |
| 8 | 21.07 | 16.73 | 14.02 |
| 9 | 21.06 | 16.63 | 13.89 |

The overall trend here is that the higher the compression level, the lower the compression ratio, meaning a smaller file. In this case xz always provides the best compression ratio, followed by bzip2, with gzip coming in last; however, as the compression time results show, xz takes a lot longer to achieve this above compression level 3.

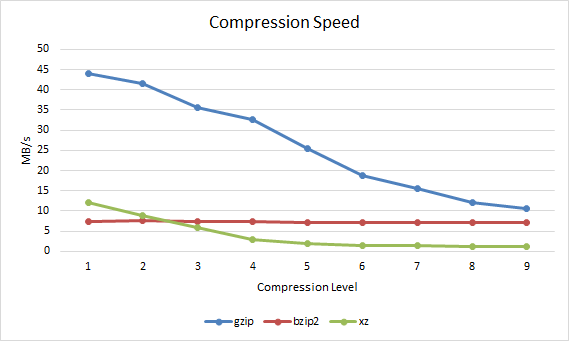

Compression Speed

The compression speed in MB per second (the uncompressed file size divided by the compression time, with 1 MB = 1,000,000 bytes) can also be observed.

| Level | gzip (MB/s) | bzip2 (MB/s) | xz (MB/s) |
|---|---|---|---|
| 1 | 43.95 | 7.37 | 11.98 |
| 2 | 41.47 | 7.49 | 8.9 |
| 3 | 35.54 | 7.42 | 5.97 |
| 4 | 32.63 | 7.33 | 2.96 |
| 5 | 25.56 | 7.22 | 1.87 |
| 6 | 18.8 | 7.12 | 1.52 |
| 7 | 15.47 | 7.07 | 1.39 |
| 8 | 11.95 | 7.12 | 1.29 |
| 9 | 10.69 | 7.01 | 1.16 |

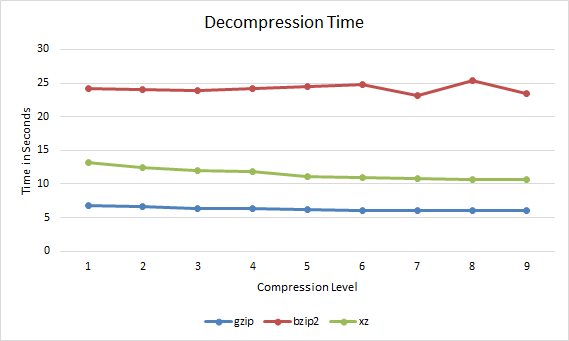
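The speed figures in the table above match the uncompressed size of linux-3.18.19.tar divided by the elapsed time, with 1 MB taken as 1,000,000 bytes; for example, 580,761,600 bytes in 13.213 seconds gives the 43.95 MB/s listed for gzip at level 1. The helper below (`speed` is an illustrative name) is a minimal sketch of that calculation, and the same formula reproduces the decompression speeds when given the decompression times.

```shell
#!/bin/sh
# Hypothetical helper: throughput in MB/s, where 1 MB = 1,000,000 bytes.
# $1 = uncompressed size in bytes, $2 = elapsed time in seconds.
speed() {
  awk -v b="$1" -v s="$2" 'BEGIN { printf "%.2f\n", b / s / 1000000 }'
}

speed 580761600 13.213    # gzip -1 compression:   prints 43.95
speed 580761600 78.831    # bzip2 -1 compression:  prints 7.37
speed 580761600 6.771     # gzip -1 decompression: prints 85.77
```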

Decompression Time

Next up is how long each file, compressed at a particular compression level, took to decompress, in seconds.

| Level | gzip (s) | bzip2 (s) | xz (s) |
|---|---|---|---|
| 1 | 6.771 | 24.23 | 13.251 |
| 2 | 6.581 | 24.101 | 12.407 |
| 3 | 6.39 | 23.955 | 11.975 |
| 4 | 6.313 | 24.204 | 11.801 |
| 5 | 6.153 | 24.513 | 11.08 |
| 6 | 6.078 | 24.768 | 10.911 |
| 7 | 6.057 | 23.199 | 10.781 |
| 8 | 6.033 | 25.426 | 10.676 |
| 9 | 6.026 | 23.486 | 10.623 |

For gzip and xz, the file decompressed faster the higher the compression level it had been compressed with, while bzip2 decompression times stayed between roughly 23.2 and 25.4 seconds with no clear trend. Therefore, if you are going to serve a compressed file over the Internet many times, it may be worth compressing it with xz at compression level 9, as this will both reduce bandwidth over time when transferring the file and be faster for everyone to decompress.

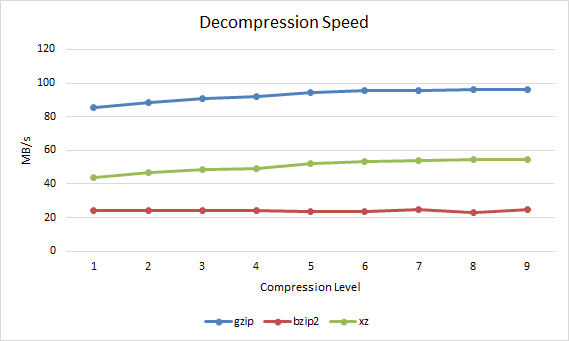

Decompression Speed

The decompression speed in MB per second (the uncompressed file size divided by the decompression time) can also be observed.

| Level | gzip (MB/s) | bzip2 (MB/s) | xz (MB/s) |
|---|---|---|---|
| 1 | 85.77 | 23.97 | 43.83 |
| 2 | 88.25 | 24.1 | 46.81 |
| 3 | 90.9 | 24.24 | 48.5 |
| 4 | 91.99 | 24 | 49.21 |
| 5 | 94.39 | 23.7 | 52.42 |
| 6 | 95.55 | 23.45 | 53.23 |
| 7 | 95.88 | 25.03 | 53.87 |
| 8 | 96.26 | 22.84 | 54.4 |
| 9 | 96.38 | 24.72 | 54.67 |

Performance Differences and Comparison

By default, when the compression level is not specified, gzip uses -6, bzip2 uses -9 and xz uses -6. The reason for this is fairly clear from the results. For gzip and xz, -6 provides a good level of compression without taking too long to complete; it’s a fair trade-off point, as higher compression levels take noticeably longer to process. Bzip2, on the other hand, is best used at its default compression level of 9, as is also recommended in the manual page. The results here confirm this: the compression ratio improves at each level, while the time taken stays almost the same, varying by only about 5 seconds (77.6 to 82.8 seconds) across levels 1 to 9.

In general xz achieves the best compression, followed by bzip2 and then gzip. To achieve that better compression, however, xz usually takes the longest to complete, followed by bzip2 and then gzip.

xz takes a lot more time at its default compression level of 6 (about 383 seconds), while at compression level 9 bzip2 takes about 28 seconds longer than gzip (83 versus 54 seconds) and compresses noticeably better. The difference in compression ratio between bzip2 and xz is also smaller than the difference between bzip2 and gzip, which makes bzip2 a good trade-off for compression.

Interestingly, the lowest xz compression level of 1 compresses better than gzip at compression level 9 (a ratio of 18.08% versus 21.06%) and even completes faster (about 48 versus 54 seconds). Therefore xz -1 can be used instead of gzip -9 to get a better compression ratio in less time.

Based on these results, bzip2 is a good middle ground for compression: gzip is faster but compresses noticeably less, while xz may not really be worth it at its default compression level of 6, as it takes much longer to complete for little extra gain.

However, decompressing with bzip2 takes much longer than with xz or gzip. xz is a good middle ground here, while gzip is again the fastest.

Conclusion

So which should you use? It’s going to come down to using the right tool for the job and the particular data set that you are working with.

If you are interactively compressing files on the fly, you may want to do this quickly with gzip -6 (the default compression level) or xz -1. However, if you’re configuring log rotation that will run automatically overnight during a period of low resource usage, it may be acceptable to use more CPU resources with xz -9 to save the greatest amount of space possible. For instance, kernel.org compresses the Linux kernel with xz: spending extra time to compress the file well once makes sense when it will be downloaded and decompressed thousands of times, resulting in bandwidth savings while still offering decent decompression speeds.

Based on the results here, if you’re simply after being able to compress and decompress files as fast as possible with little regard to the compression ratio, then gzip is the tool for you. If you want a better compression ratio to save more disk space and are willing to spend extra processing time to get it, then xz will be best to use. Although xz takes the longest to compress at higher compression levels, it has fairly good decompression speed and compresses quite fast at lower levels. Bzip2 provides a good trade-off between compression ratio and processing speed; however, it takes the longest to decompress, so it may be a good option if the content being compressed will be decompressed infrequently.

In the end the best option will come down to what you’re after between processing time and compression ratio. With disk space continually becoming cheaper and available in larger sizes you may be fine with saving some CPU resources and processing time to store slightly larger files. Regardless of the tool that you use, compression is a great resource for saving storage space.

Hi Jarrod –

Al Wegener here, a serial entrepreneur living near Santa Cruz (Silicon Valley). I’m a compression researcher & inventor, and I wanted to compliment you on your well-written, well-researched comparison between gzip, bzip2, and XZ. If you’re ever in the SF Bay Area, please ping me and let’s have a beer!

Cheers,

Al

Hi Al, thanks a lot for the kind words! I’ll let you know ;)

You did a great job clearly laying out what you found about the various compression programs.

Thanks for the feedback!

Jarrod –

Excellent article. We are doing a research project aimed at medical image (x-ray, CT, etc.) compression, focusing on lossless methods. We pre-process the medical images to optimize compression in commercial or open-source compressors, and also have algorithms that select certain image sub-areas for lossy or lossless compression. From your data, it looks like xz is worth testing (have already tried Bzip and gzip).

Thanks! Yes I’d definitely say XZ is worth a look at.

Consider good points for cutting over – from the results above

gzip 1-7 for fastest compressions, then

xz 1-3 (better than gzip 8+)

then

bzip2 level 4+ for faster harder compressions

or stay with xz for harder compressions

PNG is probably your best bet for lossless image compression. It should be better than generalised compressors.

Thank you for this very interesting comparison!

I did some extremely thorough tests of this and found that xz -1 is nearly always a better drop in for gzip -9 with quite impressive gains for little to no increase in cost.

If you run tests on a lot of data, then you might notice that xz is a bit special. Sometimes higher compression levels will slightly worsen the compression ratio. They turn on differing options at various levels, which usually result in a net improvement but don’t guarantee it.

It is also worth noting that the memory usage for xz can be extreme.

As for how bzip2 relates to this I am not sure. xz makes bzip2 irrelevant so I have never bothered with it.

Thanks for the information, I agree, bzip2 does not seem very useful anymore now that xz is around.

This was a rather interesting read and for sure it will be referenced by many for years to come.

However, I wonder if by adding in the parallel compressor/decompressor lbzip2, the conclusions might sway in the favor of it? As far as I know, xz only has parallel compression but not decompression.

There was also a post by Antonio Diaz Diaz concerning the longevity of data compressed by xz vs say bzip2. This would be quite interesting for archivists, but I imagine backups of the original uncompressed data obviates the concern.

http://www.nongnu.org/lzip/xz_inadequate.html

Does anyone have any contrasting opinion with regard to these two points?

You can use xz --threads=0 to solve the speed problem.

For me, with 8 cores, it made a huge difference in time.

does this enable xz to use an unlimited number of threads?

Plus, I guess it’s equivalent to

xz -T0.

Excellent work here, but I think redefining compression ratio to meet your explanation is a little confusing, i.e. a representation that compresses a 10MB file to 2MB would yield a space savings of 1 - 2/10 = 0.8, often notated as a percentage, 80% not 20%.

https://en.wikipedia.org/wiki/Data_compression_ratio

Somehow all the benchmarks of xz miss that it also has a “-0” compression level, which is significantly faster than “-1”.

Hiya,

In your conclusion it may be a good idea to state under which conditions it is wise to change compressors.

It seems like if you contemplate using gzip at level 8+, it may be better to switch to xz, as it compresses harder at similar speeds. bzip2 seems to be faster at higher compression levels, so my conclusion:

use gzip to compression level 8, then switch to xz for level 1-3, then for anything better switch to bzip2

As someone already said, this would be quite interesting for archivists.

Nice comparison! The share buttons not working for me :-(

Thanks!

This is an excellent article!

The Compression Ratio was very confusing. That’s not what compression ratio means.

I look forward to your expert explanation.

Not confusing, just the reciprocal of what you normally see. 4x compression is listed as 25% compressed or 400% uncompressed. It lists MB compressed per 100 MB uncompressed.

Great article! This answered a lot of questions for me. Will probably be using xz in the future :) Thanks!

Looks like an interesting read with regards to why NOT to use ‘xz’. Note this may be biased, I did not read it.

https://www.nongnu.org/lzip/xz_inadequate.html

How can I get the compression speed for each level of compression in gzip?

Can you update your test with PIGZ as a gzip replacement?

Good article.

Suggesting one improvement: add a chart that shows the overall performance of the 3 methods.

The X axis should be time, the Y axis should be compression ratio.

This would make the trade-off between the options easier to understand.

If you are managing a large consumer-facing website, I would not follow the recommendation at the end of the article. You will want to compress all of your log files on all your servers “as fast as possible.” If space is an issue, then keep fewer local log files! You should only be keeping enough local log files for quick diagnostics during short network outages. Log data should be pushed to remote systems with services like syslog, datadog, splunk, etc., for long-term retention and analysis.

Standalone servers are rarely “idle”. I personally had an issue with batch jobs not completing in time because the log file compression jobs were hogging the CPU.

good